Have you ever wondered how a machine can write a poem or create a photorealistic image from a simple text description? This is possible thanks to generative AI.

Basics of Generative AI

Generative AI (generative artificial intelligence) refers to AI systems that can generate new, original content that resembles the data they were trained on. The data is not just interpreted; new data is created from it. In essence, these systems learn patterns and structures from existing data and generate plausible variations, be it text, images, music, code or even video. The focus is on creation rather than pure analysis or prediction. To make this clearer, we distinguish it from two other prominent types of AI.

Differences from other AI models

Discriminative AI

This type of model learns to distinguish between data points and assign them to categories. In other words, it classifies existing data. A classic example is a spam filter that decides whether an email is “spam” or “not spam”. It does not generate anything new, but makes a yes/no decision based on learned patterns.

Predictive AI

This AI makes predictions – in the sense of the Latin praedicere (to predict) – by evaluating historical data. It answers the question “What will happen next?”. Examples of this are weather forecasts or the prediction of share prices. Here too, no new content is generated, only probabilities and estimates.

Generative AI

This model, on the other hand, learns the underlying patterns and structures in a data set so well that it can generate completely new but plausible examples from them. It answers the question: “What would a new example look like that fits this data?”.

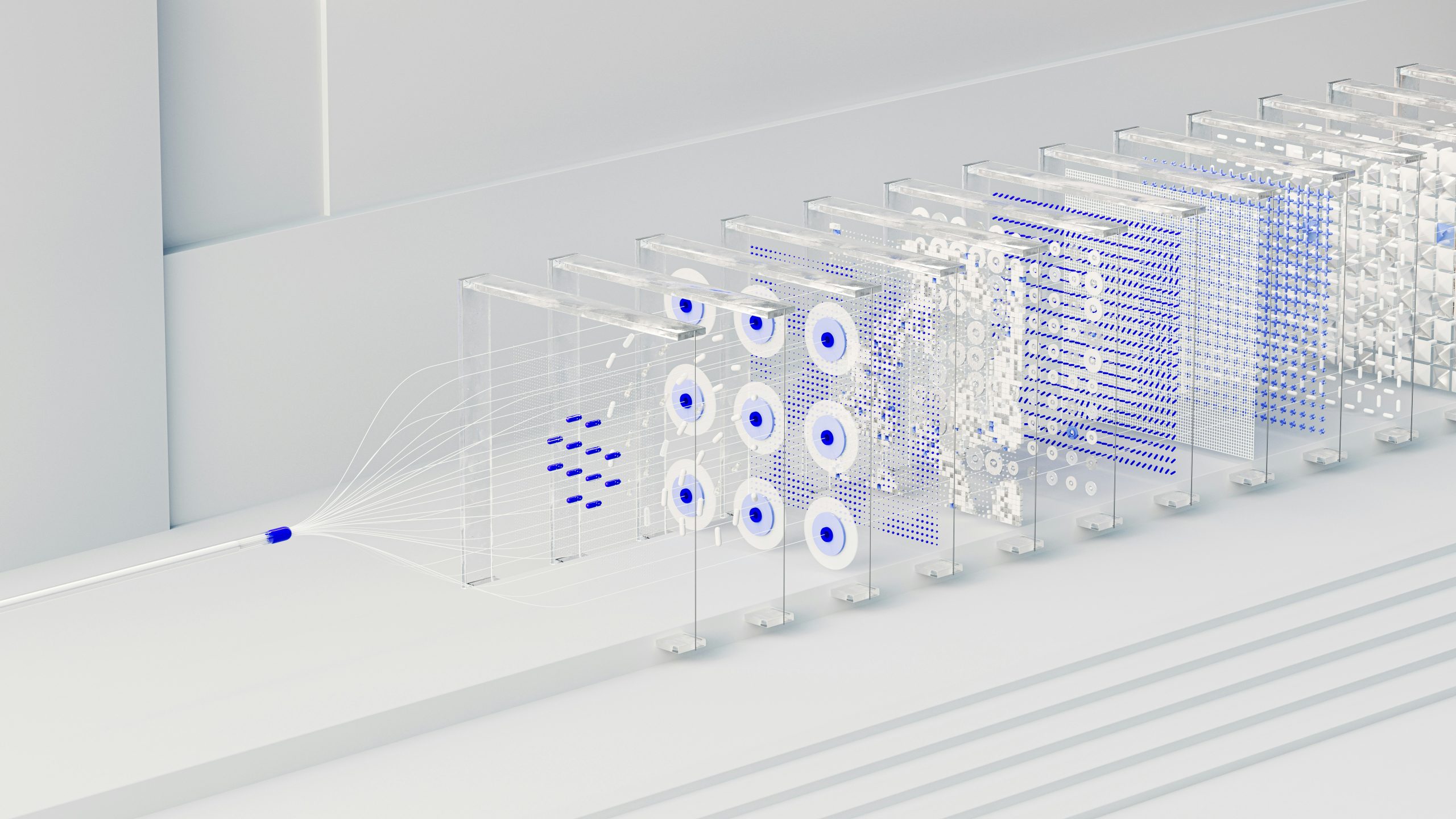
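The contrast between classifying and generating can be made concrete with a toy sketch. Everything in it is an assumption for illustration: the data is made up, message length stands in for the features a real spam filter would use, and a single Gaussian stands in for a real generative model.

```python
import random
import statistics

random.seed(0)

# Made-up training data: message lengths (in words) for two classes.
ham_lengths = [random.gauss(40, 8) for _ in range(200)]
spam_lengths = [random.gauss(15, 5) for _ in range(200)]

# Discriminative view: learn only a boundary that separates the classes.
def training_errors(threshold):
    """Count training messages that a given threshold would misclassify."""
    wrong_spam = sum(1 for x in spam_lengths if x >= threshold)
    wrong_ham = sum(1 for x in ham_lengths if x < threshold)
    return wrong_spam + wrong_ham

threshold = min(sorted(ham_lengths + spam_lengths), key=training_errors)

def classify(length):
    """Label an existing data point; nothing new is created."""
    return "spam" if length < threshold else "not spam"

# Generative view: model how one class is distributed, then sample from it.
ham_mean = statistics.mean(ham_lengths)
ham_std = statistics.stdev(ham_lengths)

def generate_ham_length():
    """Draw a new, plausible example that was not in the training data."""
    return random.gauss(ham_mean, ham_std)

new_examples = [generate_ham_length() for _ in range(5)]
print(classify(12), classify(45))
print([round(x, 1) for x in new_examples])
```

The classifier can only answer questions about data it is shown, while the sampler produces values that never appeared in the training set but fit its pattern.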

Core technologies of generative AI

There are several established approaches, and which one is used depends on the application domain (e.g. text vs. images). The most common are:

Generative Adversarial Networks (GANs)

GANs consist of two neural networks that “compete” against each other: the generator and the discriminator. Imagine an art forger (generator) trying to paint a perfect duplicate of a Rembrandt, and an art detective (discriminator) who has to distinguish real from fake paintings.

- The generator creates synthetic data. In our example, it is a duplicate that is intended to look real.

- The discriminator compares it with genuine examples from the training data set and judges whether the Rembrandt is genuine or fake.
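One common way to express these two roles is as a pair of loss functions. The sketch below assumes the widely used binary cross-entropy formulation with a "non-saturating" generator loss. The function names are hypothetical, and the probabilities are typed in by hand instead of coming from real networks.

```python
import math

def binary_cross_entropy(prediction, target):
    """Loss for one probability `prediction` against a 0/1 `target`."""
    eps = 1e-12  # keeps log() away from zero
    p = min(max(prediction, eps), 1 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))

def discriminator_loss(p_real, p_fake):
    """The detective wants p_real near 1 (genuine) and p_fake near 0 (forgery)."""
    return binary_cross_entropy(p_real, 1) + binary_cross_entropy(p_fake, 0)

def generator_loss(p_fake):
    """The forger wants the detective to rate its forgery as genuine (near 1)."""
    return binary_cross_entropy(p_fake, 1)

# p_fake is the detective's estimated probability that a forgery is genuine.
for p_fake in (0.1, 0.5, 0.9):
    d_loss = discriminator_loss(0.9, p_fake)
    g_loss = generator_loss(p_fake)
    print(p_fake, round(d_loss, 3), round(g_loss, 3))
```

As the forgery becomes more convincing (higher `p_fake`), the generator's loss falls and the discriminator's loss rises, which is the competition described in the analogy.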

The generator uses this evaluation or feedback to improve its next forgery. Through

Diffusion models

Diffusion models, as used in Stable Diffusion or Midjourney, take a different approach. They generate new data, such as images or sounds, by reversing a step-by-step process that adds noise. Imagine you want an AI to draw a picture of a cat. The diffusion process has two phases:

The forward process (add noise)

First, a clear image is gradually transformed into pure noise (like the “snow” on an old TV). The process takes a real image, say a cat, and adds a little more random noise in each of many small steps until nothing of the original image is recognizable. During training, this is repeated for a very large number of images, so that the model sees what images look like at every noise level and develops a deep understanding of how an image dissolves into noise.
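The forward process can be sketched in a few lines. This is a minimal illustration under assumptions that are not from the article: a linear noise schedule with 1,000 steps (a common choice in the diffusion literature), and a list of 64 numbers standing in for a real image.

```python
import math
import random

random.seed(0)

NUM_STEPS = 1000
# Assumed linear noise schedule: very little noise early, more noise later.
betas = [1e-4 + (0.02 - 1e-4) * t / (NUM_STEPS - 1) for t in range(NUM_STEPS)]

def add_noise_step(pixels, beta):
    """One forward step: shrink the signal slightly and add Gaussian noise."""
    keep = math.sqrt(1.0 - beta)
    spread = math.sqrt(beta)
    return [keep * x + spread * random.gauss(0.0, 1.0) for x in pixels]

def signal_fraction(step_count):
    """Share of the original pixel value still present after `step_count` steps."""
    product = 1.0
    for beta in betas[:step_count]:
        product *= 1.0 - beta
    return math.sqrt(product)

image = [1.0] * 64  # stand-in for a real image: 64 "pixels", all bright
noisy = image
for beta in betas:
    noisy = add_noise_step(noisy, beta)

for steps in (0, 100, 500, 1000):
    print(steps, round(signal_fraction(steps), 4))
```

The printed fraction shrinks toward zero as the step count grows. After the last step, `noisy` is practically indistinguishable from random noise.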

The reverse process (remove noise)

This is the actual creative part. The model learns to reverse this process. It starts with an image that consists only of random noise and then gradually removes this noise until a clear, new image emerges. Because it has learned in training how noise is added to an image, it can now predict how to remove the noise to reveal a believable image. If you tell it to “create a cat”, it will guide this de-noising process so that in the end an image of a cat appears that it has never seen before.
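The denoising loop can be sketched in the same spirit. A trained neural network is out of scope here, so the sketch makes a strong simplifying assumption: the hypothetical "dataset" consists of one single pixel value, `TARGET`, for which the noise can be computed exactly. The update rule follows the standard DDPM-style sampling step. The schedule and all names are illustrative.

```python
import math
import random

random.seed(0)

NUM_STEPS = 200
betas = [1e-4 + (0.05 - 1e-4) * t / (NUM_STEPS - 1) for t in range(NUM_STEPS)]
alphas = [1.0 - b for b in betas]
alpha_bars = []
running = 1.0
for a in alphas:
    running *= a
    alpha_bars.append(running)

TARGET = 0.7  # hypothetical dataset: every training "image" is this one value

def predict_noise(x_t, t):
    """Stand-in for the trained network: exact noise for a one-value dataset."""
    signal = math.sqrt(alpha_bars[t]) * TARGET
    return (x_t - signal) / math.sqrt(1.0 - alpha_bars[t])

def denoise(x_start):
    """Start from noise and remove the predicted noise step by step."""
    x = x_start
    for t in reversed(range(NUM_STEPS)):
        eps = predict_noise(x, t)
        x = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps) / math.sqrt(alphas[t])
        if t > 0:
            # Every step except the last re-injects a little fresh randomness.
            x += math.sqrt(betas[t]) * random.gauss(0.0, 1.0)
    return x

samples = [denoise(random.gauss(0.0, 1.0)) for _ in range(3)]
print([round(s, 4) for s in samples])
```

Each run starts from a different random value and still ends at the target, because the noise predictor steers every step. In a real model, a neural network conditioned on the prompt takes the place of `predict_noise`.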

Transformer architectures (GPT)

Transformer models are the basis of generative language models such as GPT (Generative Pre-trained Transformer). These models generate new text by learning how language works.

To understand how these models learn language and create new content based on it, let’s take a look at their inner mechanisms. The process starts with the way they fundamentally process text and ends with the way they weigh word meanings in context. This leads us directly to the following key questions:

What are tokens?

Text is broken down into small building blocks called “tokens”. These can be whole

What is self-attention?

It’s like a radar that the model activates for each token. It looks

How does it generate content?

The model predicts one word (or token) after another, each time based on everything that is already there. This creates a flowing, meaningful text: for example, it starts with “Tell me a story” and builds on it word by word without losing the thread.
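The token-by-token loop can be illustrated with a deliberately tiny stand-in for a language model. The sketch below is an assumption-laden toy: it splits text on whitespace instead of using a real tokenizer, and it looks only at the last token (a bigram model), whereas a transformer takes the whole preceding text into account. The corpus is made up.

```python
import random
from collections import Counter, defaultdict

random.seed(0)

# Made-up training text, split into whitespace "tokens".
corpus = "tell me a story . tell me a joke . a story about a cat . a joke about a dog ."
tokens = corpus.split()

# "Training": count which token follows which.
next_counts = defaultdict(Counter)
for current, following in zip(tokens, tokens[1:]):
    next_counts[current][following] += 1

def next_token(current):
    """Sample the next token in proportion to how often it followed `current`."""
    candidates = next_counts[current]
    return random.choices(list(candidates), weights=list(candidates.values()))[0]

def generate(prompt, max_new_tokens=8):
    """Extend the prompt one token at a time, feeding each output back in."""
    output = prompt.split()
    for _ in range(max_new_tokens):
        if output[-1] not in next_counts:
            break  # no known continuation for this token
        output.append(next_token(output[-1]))
    return " ".join(output)

print(generate("tell me"))
```

The important part is the loop in `generate`: each newly chosen token becomes part of the input for the next prediction.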

Generative AI training

The path to high-performance generative language models is associated with an enormous demand for data and computing power. Training these models is so resource-intensive that it pushes the limits of today’s supercomputers and raises significant ecological questions. This challenge is driving research to develop more sustainable and efficient methods.

Data volumes and computing resources

Generative models are usually trained unsupervised or self-supervised. They analyze huge and diverse data sets and are correspondingly data-hungry.

- Language models such as GPT-5 are trained on enormous text collections, including a large part of the publicly accessible Internet (e.g. Common Crawl), books and Wikipedia. This can amount to hundreds of terabytes of data.

- Image models are trained with billions of image-text pairs (e.g. the LAION-5B dataset).

The training itself requires thousands of specialized processors (GPUs), which often run continuously for several months. For example, the training of Apertus on the Alps supercomputer at the CSCS in Lugano required a total of around 6 million GPU hours. The energy consumption amounted to around 5 gigawatt hours (GWh). This would be enough to supply around 1,200 to 1,400 family households with electricity for an entire year.
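The figures above can be cross-checked with simple arithmetic. The sketch below uses only the numbers quoted in the text. The per-GPU-hour and per-household values are derived from them and are rough averages, not measured data.

```python
GPU_HOURS = 6_000_000    # around 6 million GPU hours
ENERGY_KWH = 5_000_000   # around 5 GWh, expressed in kWh
HOUSEHOLDS = (1200, 1400)

# Average energy per GPU hour implied by the two totals (about 0.83 kWh).
kwh_per_gpu_hour = ENERGY_KWH / GPU_HOURS

# Annual consumption per household implied by the comparison
# (about 4,167 kWh for 1,200 households, about 3,571 kWh for 1,400).
kwh_per_household = [ENERGY_KWH / n for n in HOUSEHOLDS]

print(round(kwh_per_gpu_hour, 2))
print([round(x) for x in kwh_per_household])
```

The comparison therefore assumes a household that uses roughly 3,600 to 4,200 kWh of electricity per year.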

Instead of just reading about it, you can now use the result directly and easily. We offer you free chat access to Apertus via our Pantobot AI platform. Try it out now!