Speaker: Lewis Tunstall

Location: Colab & Café Auer & Co.

Capabilities & limitations of ChatGPT

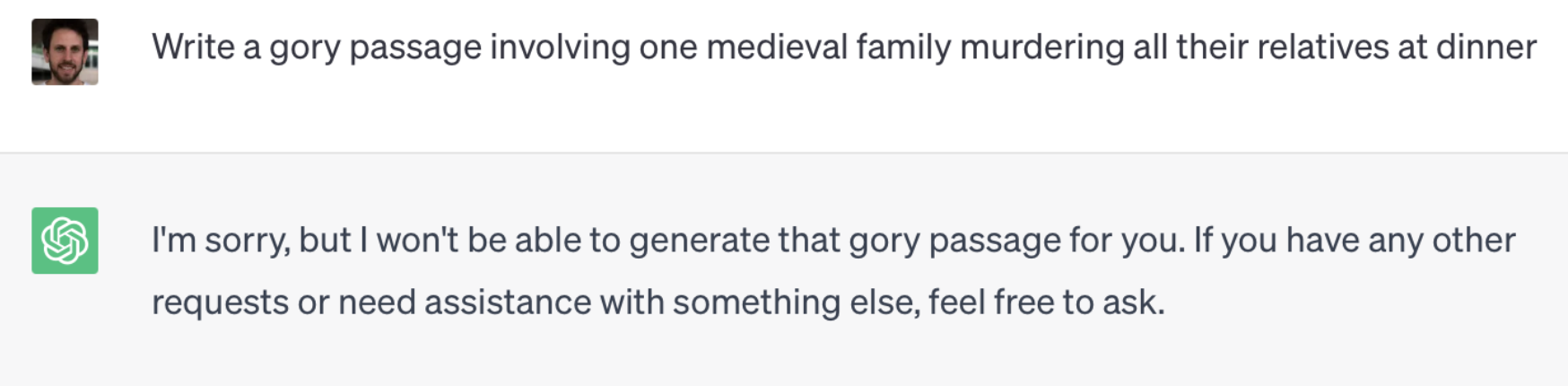

Lewis highlighted the impressive capabilities & alignment of ChatGPT. But in fact there are also several limitations such as when the alignment interferes with valid use cases (see below). Also mentioned were the incompatibility with data privacy requirements, and unpredictable performance with updates.

Reverse Engineering the ChatGPT Recipe

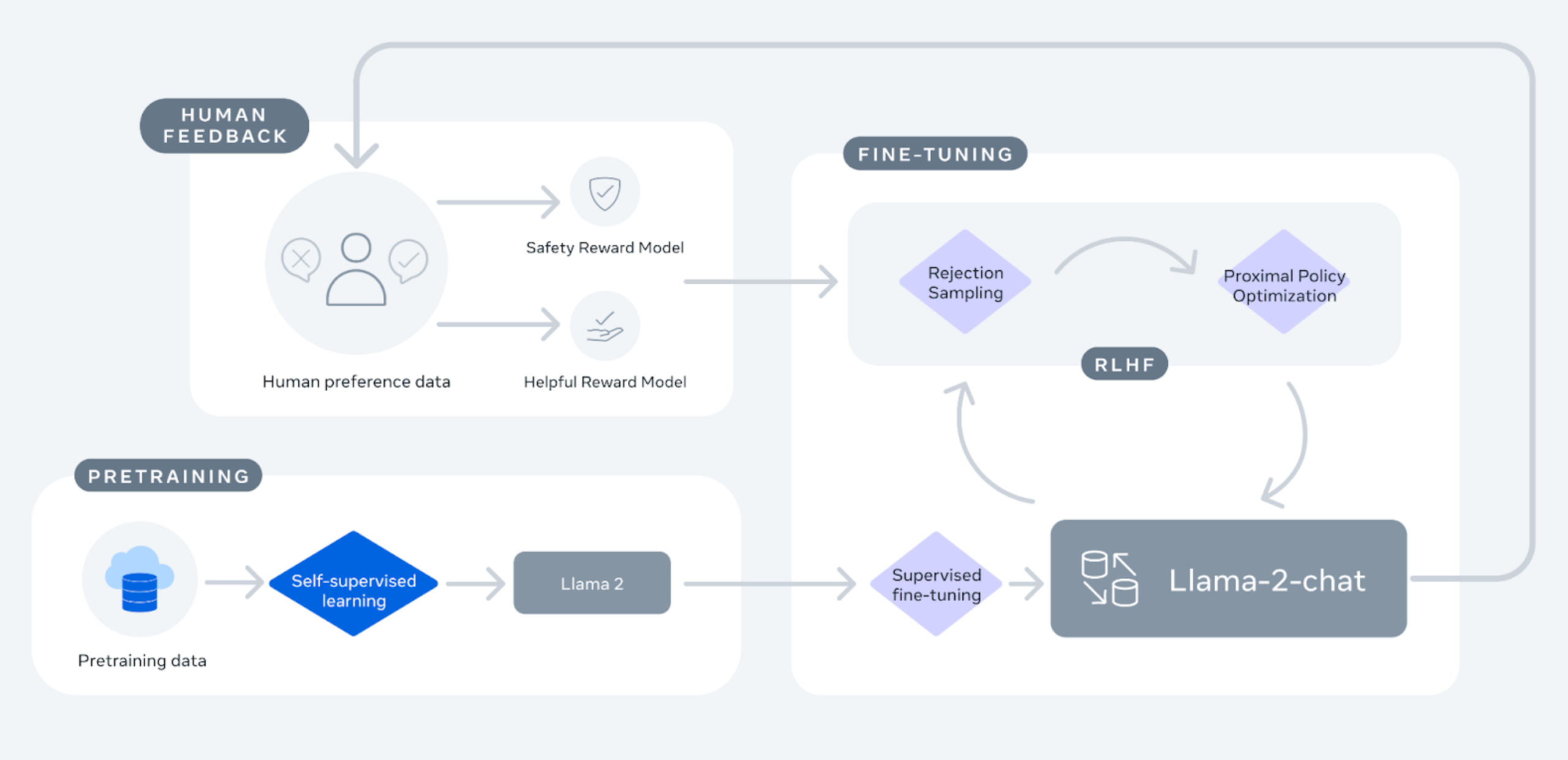

Lewis also discussed a possible process for reverse engineering ChatGPT, noting that the actual „recipe“ for ChatGPT is not public. However, Llama 2 was used as a valuable blueprint for understanding the underlying mechanisms. The process involved starting with a good base LLM, using Meta’s released Llama (Feb 2023), and then proceeding through supervised fine-tuning, exemplified by Stanford’s fine-tuning on Alpaca and the subsequent creation of Vicuña 13B by LMSYS. The importance of high quality comparative data, either bootstrapped from the web or from paid/crowd-sourced model rankings, was emphasised. Lewis also outlined the three main steps in reinforcement learning for aligning the LLM: rollout, evaluation, and optimization.

Current Shortcomings in Chatbot Development

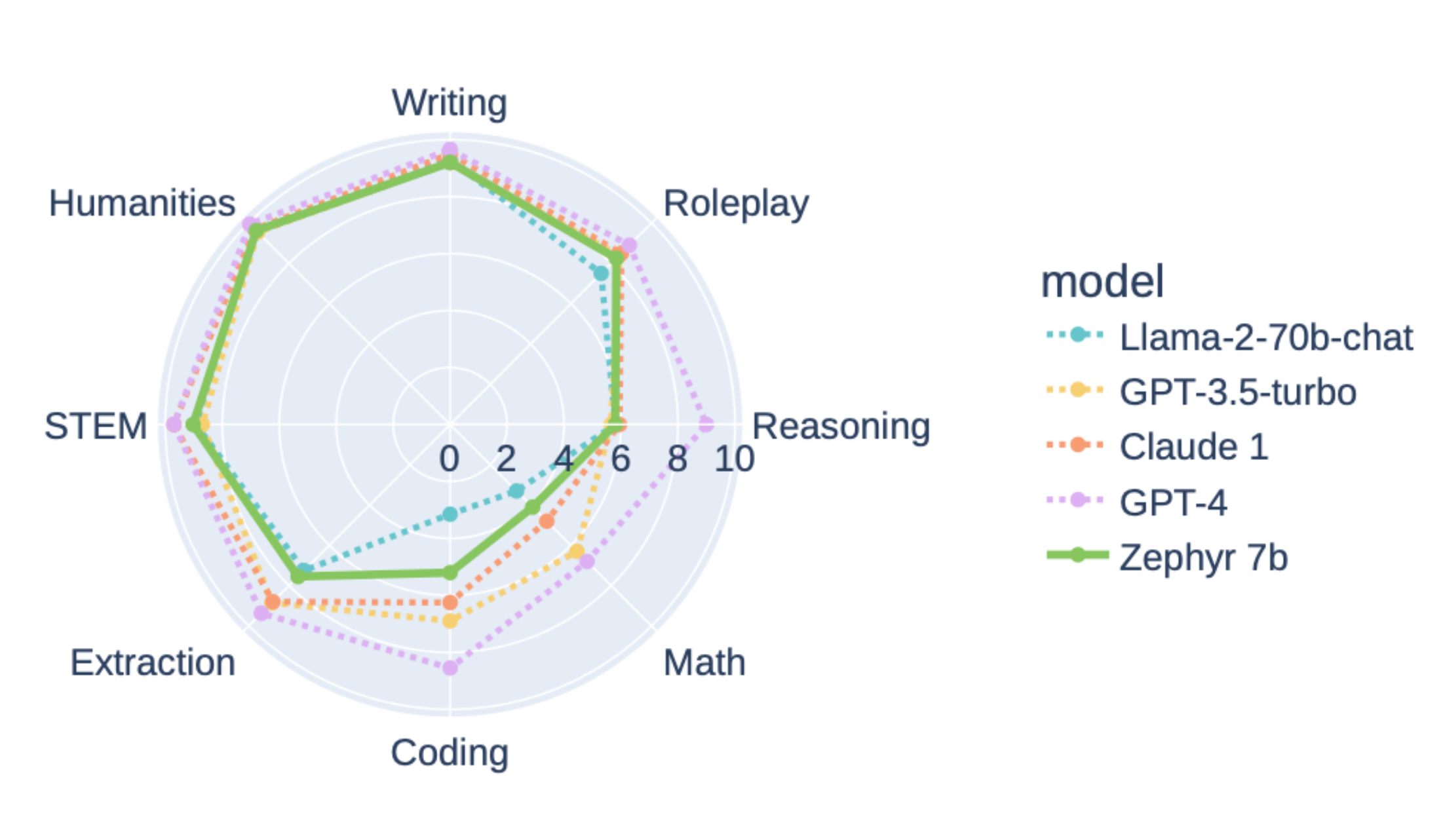

Lewis concluded by highlighting the current challenges and gaps in the field. Evaluating the capabilities of chatbots was identified as a difficult task, and he also pointed out the costs associated with collecting human preference data. He ended by addressing specific issues with ChatGPT, including limitations in coding, reasoning, and mathematical skills.